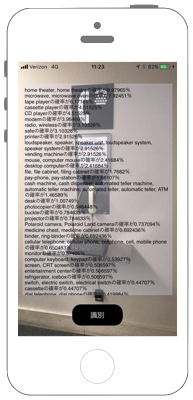

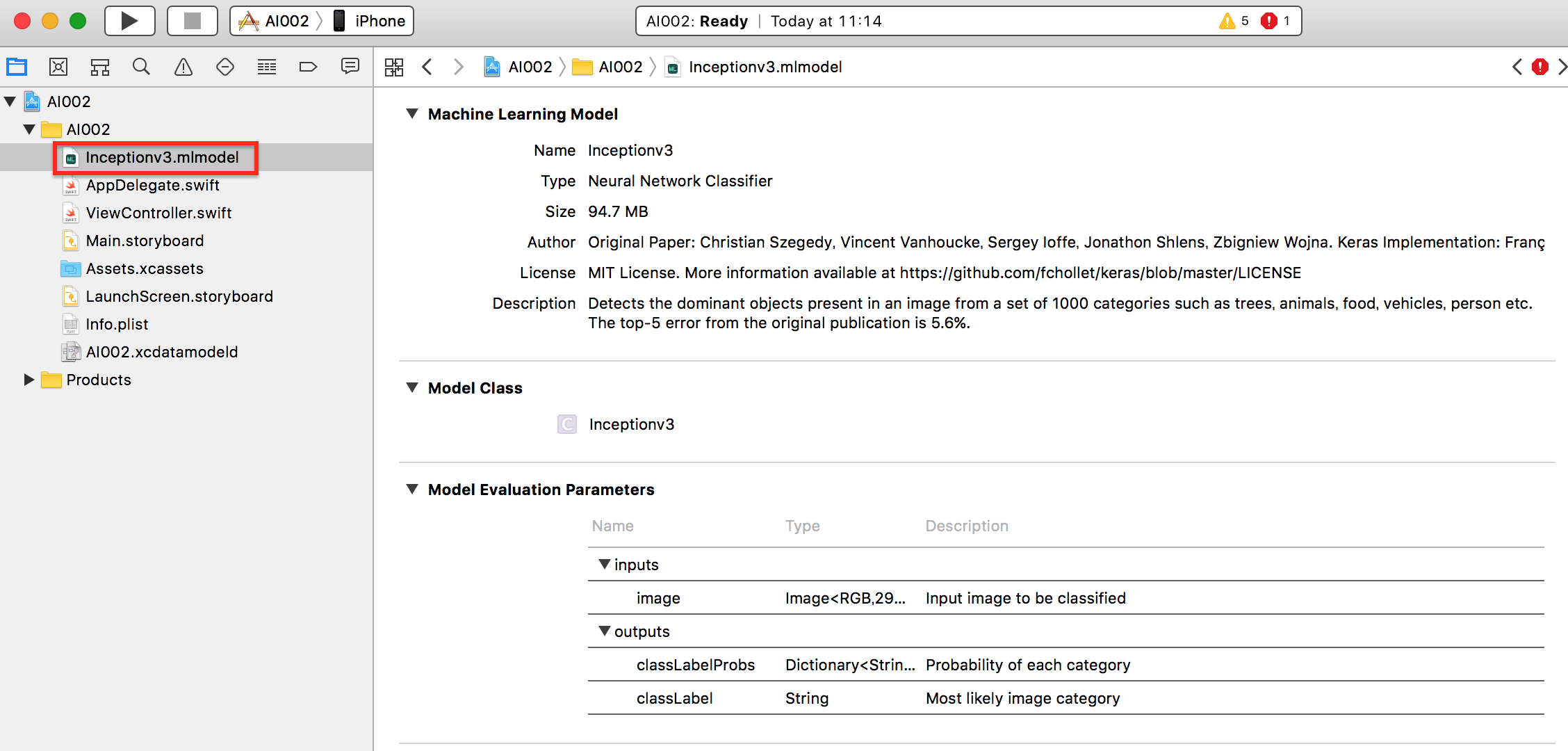

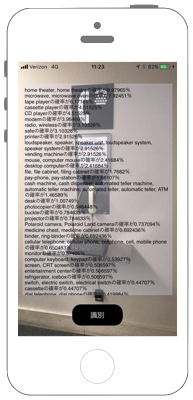

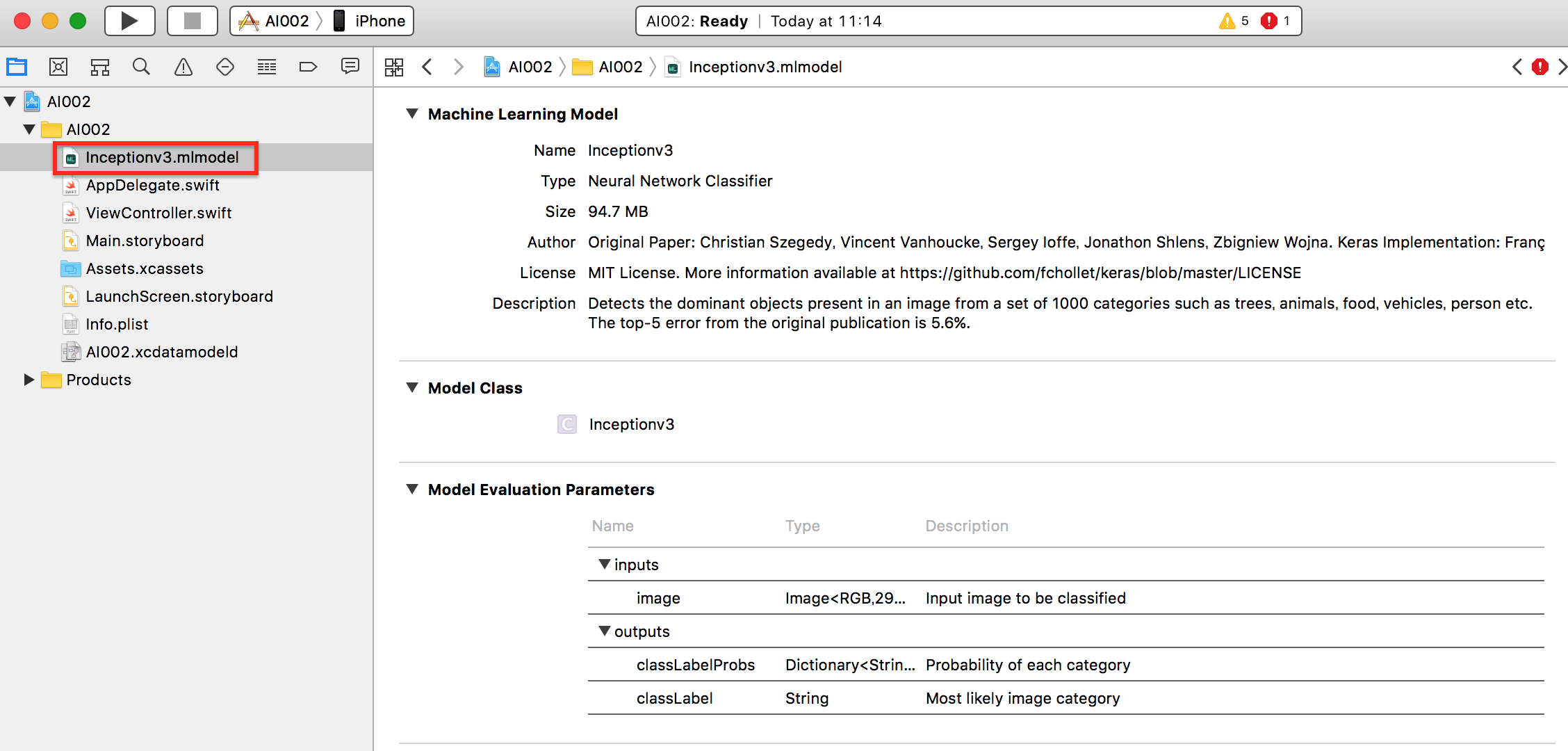

Object Recognition with Inceptionv3

Model

Inceptionv3

Prerequisites

- iOS 11

- Xcode 9

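Add Privacy - Camera Usage Description (raw key `NSCameraUsageDescription`) to Info.plist. iOS shows its value in the camera permission prompt, and the app is terminated when it accesses the camera without this entry.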
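The Info.plist entry only supplies the text for the permission prompt. The app can also check the authorization state at runtime before starting the capture session. The following is a minimal sketch of that check, not part of the original sample: `withCameraAccess` is a hypothetical helper name, and it assumes the caller wants its closure run on the main queue.

```swift
import AVFoundation

/// Hypothetical helper (not in the original sample): runs `onGranted`
/// on the main queue once camera access is available.
func withCameraAccess(_ onGranted: @escaping () -> Void) {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        // Access was granted earlier.
        DispatchQueue.main.async(execute: onGranted)
    case .notDetermined:
        // First launch: iOS shows the prompt using the Info.plist text.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            if granted {
                DispatchQueue.main.async(execute: onGranted)
            }
        }
    default:
        // .denied or .restricted: the camera cannot be used.
        print("Camera access is not available")
    }
}
```

One possible use is to wrap the session setup in `viewDidLoad`, for example `withCameraAccess { /* configure and start the session */ }`, so that the preview only starts once the user has allowed access.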

Swift 4.0

    import UIKit
    import AVFoundation
    import Vision

    class ViewController: UIViewController {

        var mySession: AVCaptureSession!
        var myDevice: AVCaptureDevice!
        var myImageOutput: AVCaptureStillImageOutput!
        var myAiTextView: UITextView!

        override func viewDidLoad() {
            super.viewDidLoad()

            // Set up the capture session with the back camera.
            mySession = AVCaptureSession()
            let devices = AVCaptureDevice.devices()
            for device in devices {
                if device.position == AVCaptureDevice.Position.back {
                    myDevice = device
                }
            }
            let videoInput = try! AVCaptureDeviceInput(device: myDevice)
            mySession.addInput(videoInput)

            // Still-image output used when the button is tapped.
            myImageOutput = AVCaptureStillImageOutput()
            mySession.addOutput(myImageOutput)

            // Full-screen camera preview.
            let myVideoLayer = AVCaptureVideoPreviewLayer(session: mySession)
            myVideoLayer.frame = self.view.bounds
            myVideoLayer.videoGravity = AVLayerVideoGravity.resizeAspectFill
            self.view.layer.addSublayer(myVideoLayer)
            mySession.startRunning()

            // Button that triggers recognition.
            let myButton = UIButton(frame: CGRect(x: 0, y: 0, width: 120, height: 50))
            myButton.backgroundColor = UIColor.black
            myButton.layer.masksToBounds = true
            myButton.setTitle("Identify", for: .normal)
            myButton.layer.cornerRadius = 20.0
            myButton.layer.position = CGPoint(x: self.view.bounds.width / 2, y: self.view.bounds.height - 50)
            myButton.addTarget(self, action: #selector(onClickMyButton), for: .touchUpInside)
            self.view.addSubview(myButton)

            // Semi-transparent text view that shows the classification results.
            myAiTextView = UITextView(frame: CGRect(x: 10, y: 50, width: self.view.frame.width - 20, height: 500))
            myAiTextView.backgroundColor = UIColor(red: 0.9, green: 0.9, blue: 1, alpha: 0.3)
            myAiTextView.text = ""
            self.view.addSubview(myAiTextView)
        }

        // Capture a still image and pass it to the classifier.
        @objc func onClickMyButton(sender: UIButton) {
            let myVideoConnection = myImageOutput.connection(with: AVMediaType.video)
            self.myImageOutput.captureStillImageAsynchronously(from: myVideoConnection!, completionHandler: { (imageDataBuffer, error) in
                if let e = error {
                    print(e.localizedDescription)
                    return
                }
                let myImageData = AVCapturePhotoOutput.jpegPhotoDataRepresentation(forJPEGSampleBuffer: imageDataBuffer!, previewPhotoSampleBuffer: nil)
                let myImage = UIImage(data: myImageData!)
                self.recognition(image: myImage!)
            })
        }

        // Run the Inceptionv3 Core ML model on the image through Vision.
        func recognition(image: UIImage) {
            let model = try! VNCoreMLModel(for: Inceptionv3().model)
            let request = VNCoreMLRequest(model: model, completionHandler: self.myAIResult)
            let handler = VNImageRequestHandler(cgImage: image.cgImage!)
            guard (try? handler.perform([request])) != nil else {
                fatalError("Error on model")
            }
        }

        // Build one line per class label and show the result.
        func myAIResult(request: VNRequest, error: Error?) {
            guard let results = request.results as? [VNClassificationObservation] else {
                fatalError("Results Error")
            }
            var result = ""
            for classification in results {
                result += "\(classification.identifier): \(classification.confidence * 100)% probability\n"
            }
            // The capture callback may run off the main thread, so update the UI on the main queue.
            DispatchQueue.main.async {
                self.myAiTextView.text = result
            }
        }
    }
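The listing above writes one line for every class label the model returns, which makes the text view hard to read. A possible refinement, sketched here as an assumption and not as part of the original sample, is to show only the most confident labels. `topResults` is a hypothetical helper; it takes plain `(identifier, confidence)` pairs so that it can be tried without the Vision framework.

```swift
import Foundation

/// Hypothetical helper (not in the original sample): formats the `limit`
/// most confident labels, one per line, with one decimal place.
/// `results` stands in for values read from VNClassificationObservation.
func topResults(_ results: [(identifier: String, confidence: Float)], limit: Int = 5) -> String {
    return results
        .sorted { $0.confidence > $1.confidence }   // highest confidence first
        .prefix(limit)                              // keep at most `limit` entries
        .map { "\($0.identifier): \(String(format: "%.1f", $0.confidence * 100))%" }
        .joined(separator: "\n")
}
```

Inside `myAIResult`, the observations could be converted with `results.map { (identifier: $0.identifier, confidence: $0.confidence) }` and the returned string assigned to `myAiTextView.text`.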

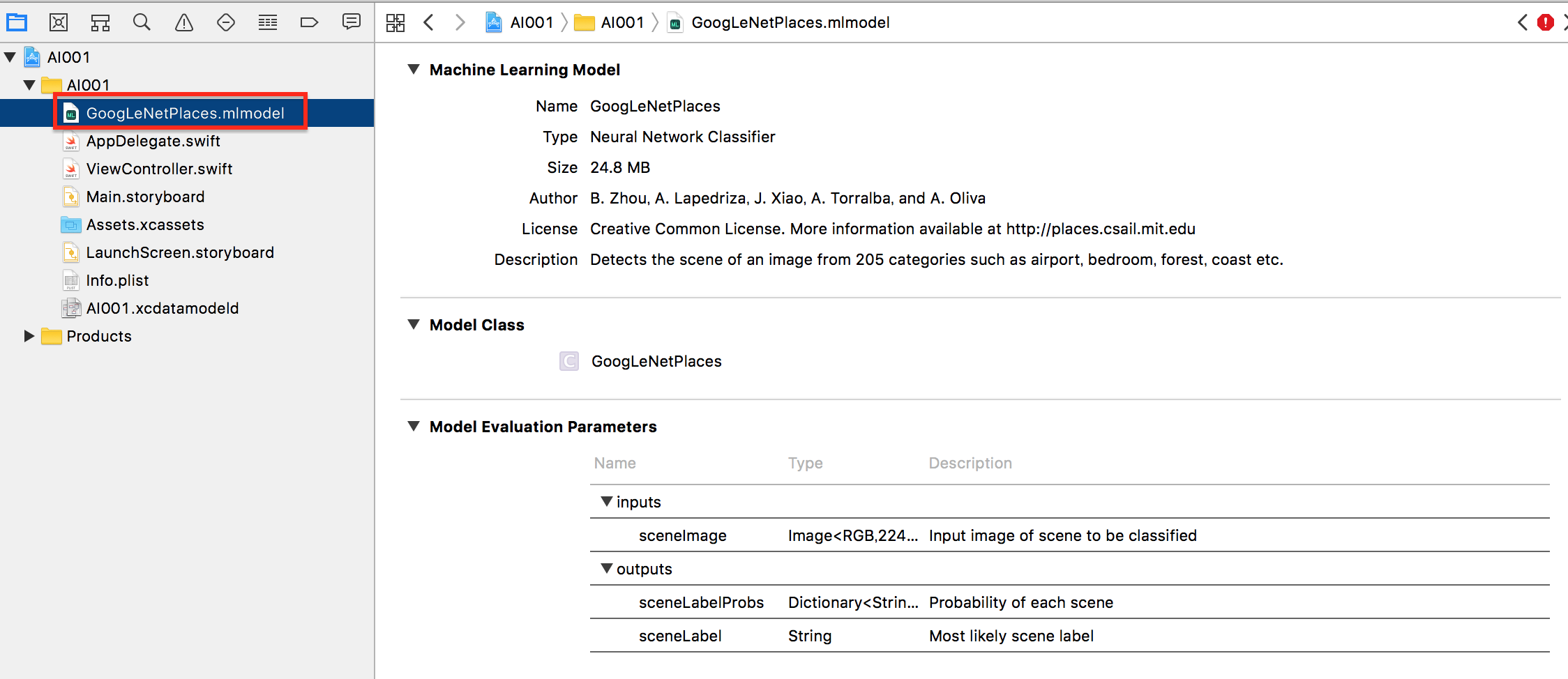

Reference